Machine learning is quickly taking its well-deserved place in enriching Big Data applications through classification of facts, identifying trends, and proposing actions based on massive profile comparisons.

Started out as a rather static workflow, first-generation machine learning has covered the following steps: 1) select salient features in the objects considered, 2) cleaning the collections to avoid statistical over/under fitting, and 3) a CPU intensive search to fit a model. A next challenge is to close this workflow loop and go after real-time learning and model adaptations.

This brings into focus a strong need to manage large collections of object features, avoiding their repetitive extraction from the raw objects, controlling the iterative learning process, and administering algorithmic transparency for post decision analysis. These are the realms in which database management systems excel. Their primary role in this world has so far mostly been limited to administration of the workflow states, e.g. administrating training sets used and conducting distributed processing.

However, should the two systems be considered separated entities, where application glue is needed to exchange information? How would such isolated systems be able to arrive at the real-time learning experience required?

Inevitably, machine-learning applications will face the volume, velocity, variety and veracity challenges of big data applications.

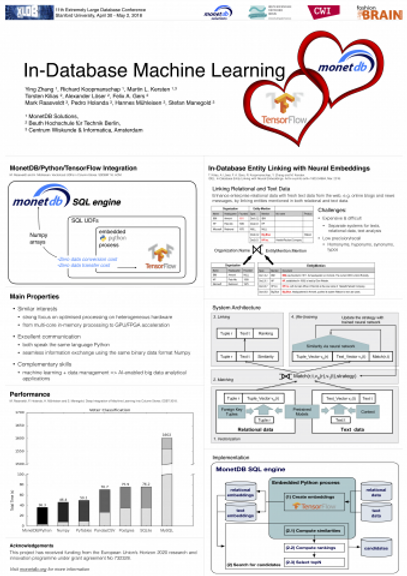

Fortunately, the database community has been spending a tremendous effort on improving the analytical capabilities of database systems and improving the software eco-system at large. So when the well-established analytical database system MonetDB met the popular new member of the machine-learning family TensorFlow, it was love at first sight. The click is immediate: they both can speak the same language (i.e. Python); they both have a strong focus on optimised processing on heterogeneous hardware (e.g. from multi-core in-memory processing to GPU/FPGA acceleration); they both serve the same communities (i.e. AI-enabled analytical applications); and most importantly this couple started out with a means of seamless communication (i.e. through the same binary data exchange format).

At the Lightning Talks sessions of the 34th IEEE International Conference on Data Engineering (ICDE2018) and the 11th Extremely Large Database Conference (XLDB2018), we will first tell the story of how the recent in-database machine learning relationship of MonetDB/TensorFlow came about. Then we will argue with two example applications, one in image analysis and another in natural language processing, that this relationship has all the potential to reach a happily-ever-after.

This project has received funding from the FashionBrain project, an European Union’s Horizon 2020 research and innovation programme under the grant agreement No 732328.